Understanding AI High-Performance Computing: Challenges in Data Center Infrastructure

The Rise of AI High-Performance Computing


Artificial Intelligence High-Performance Computing (AI HPC) represents a major evolution in data center technology. Rack-scale systems such as NVIDIA’s GB200 and GB300 NVL72 go well beyond traditional data center architectures. They mark a paradigm shift from traditional ‘servers in racks’ to ‘data centers in cabinets’, which demand significantly more power, cooling, and cabling than conventional setups.

Driven by dense GPU clusters and their immense computational power requirements, AI HPC environments are calling into question the established practices outlined in ANSI/TIA-942, a standard that has guided data center resilience and availability for years. While this standard emphasizes redundancy and efficiency in power, cooling, and cabling, its application in the rapidly advancing AI HPC landscape demands a fresh perspective.

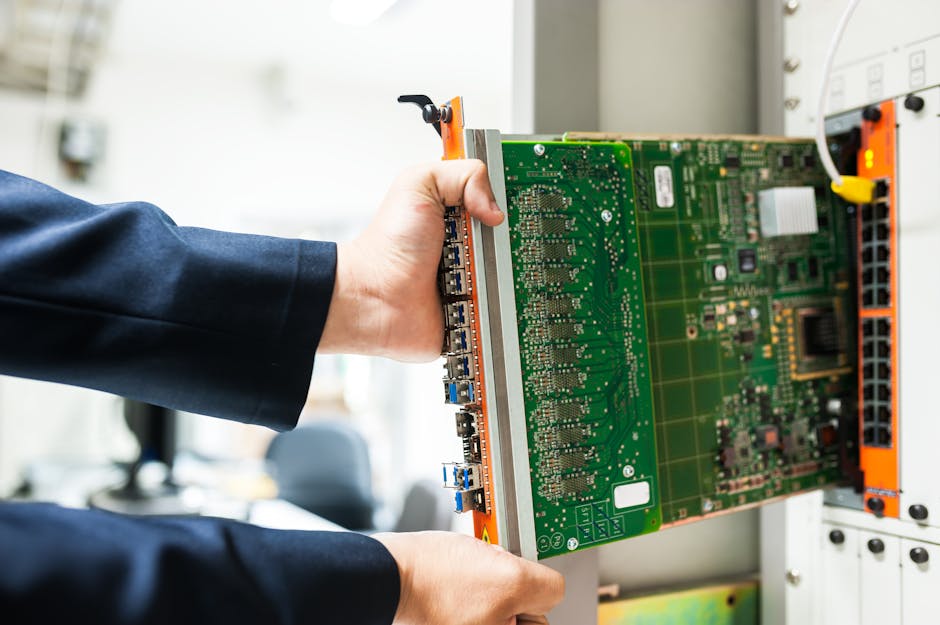

Power Requirements: A Paradigm Shift


Traditional data center racks typically draw between 5 and 15 kW. GPU superclusters for AI computing, however, are designed for loads roughly an order of magnitude higher: up to 132 kW per rack, with projections pushing toward 1 MW per rack. This increase places immense pressure on conventional power distribution models. Today’s AI HPC systems use a 48 V DC power architecture inside the rack, fed from three-phase UPS systems. NVIDIA is also exploring 800 V DC distribution, which lowers the current needed for the same power and therefore cuts I²R losses, allowing such high power levels to be delivered with far less cabling bulk.
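To see why a higher distribution voltage helps, the arithmetic can be sketched directly. This is a minimal illustration assuming an ideal DC feed and a hypothetical conductor path of 0.1 mΩ; the resistance value and the function names are illustrative, not taken from any NVIDIA design. For the same 132 kW load, current falls from 2,750 A at 48 V to 165 A at 800 V, and because loss scales with I², the same conductor dissipates about 278 times less heat.

```python
def dc_current(power_w: float, voltage_v: float) -> float:
    """Current drawn by an ideal DC load: I = P / V."""
    return power_w / voltage_v


def conductor_loss(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in a conductor carrying the full load current."""
    current = dc_current(power_w, voltage_v)
    return current ** 2 * resistance_ohm


RACK_POWER_W = 132_000        # per-rack load cited in the article
BUSBAR_RESISTANCE_OHM = 1e-4  # hypothetical 0.1 mOhm path, for illustration only

for volts in (48, 800):
    amps = dc_current(RACK_POWER_W, volts)
    loss = conductor_loss(RACK_POWER_W, volts, BUSBAR_RESISTANCE_OHM)
    print(f"{volts:>4} V: {amps:8.1f} A, {loss:8.2f} W lost in the conductor")

# Same conductor, same power: loss scales with (V_high / V_low) ** 2
print(f"loss ratio 48 V vs 800 V: {(800 / 48) ** 2:.1f}x")
```

Read the other way, a designer holding losses constant could accept a much higher-resistance (thinner) conductor at 800 V, which is the "reduced cabling bulk" benefit.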

Despite the advances in powering these superclusters, challenges such as redundancy and cost complicate implementation. Replicating these high-power infrastructures for fail-safe redundancy is not only expensive but also logistically complex, raising questions about the scalability of such systems within the existing ANSI/TIA-942 resilience framework.

Cooling Challenges: Beyond Conventional Approaches


In the AI HPC realm, rack loads of 132 kW to 1 MW bring unprecedented cooling challenges. Air cooling, standard in most data centers for decades, is no longer adequate at these densities. Technologies such as Direct Liquid Cooling (DLC) deliver coolant to cold plates mounted directly on the chips, removing heat far more efficiently. However, this approach has its drawbacks: DLC relies on a single water feed per rack, with no redundancy, which deviates from the redundancy principles behind ANSI/TIA-942 Rating 3 and Rating 4 designs.
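A rough heat-balance calculation shows why air runs out of headroom. The sketch below uses the standard relation Q = ṁ · c_p · ΔT and assumes approximate textbook properties for water and air, a hypothetical 10 K inlet-to-outlet temperature rise, and that essentially all of a 132 kW rack's electrical power ends up as heat. Under those assumptions, one rack needs on the order of 190 L/min of water, versus roughly 11 m³/s of air.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    specific_heat_j_per_kg_k: float  # c_p
    density_kg_per_m3: float         # rho


# Approximate properties near room temperature (assumed textbook values)
WATER = Coolant("water", 4186.0, 997.0)
AIR = Coolant("air", 1005.0, 1.2)


def required_flow_m3_per_s(heat_w: float, delta_t_k: float, coolant: Coolant) -> float:
    """Volumetric flow needed to carry heat_w away with a delta_t_k rise.

    From Q = m_dot * c_p * dT, with m_dot = rho * V_dot.
    """
    mass_flow_kg_per_s = heat_w / (coolant.specific_heat_j_per_kg_k * delta_t_k)
    return mass_flow_kg_per_s / coolant.density_kg_per_m3


RACK_HEAT_W = 132_000  # assume essentially all rack power becomes heat
DELTA_T_K = 10.0       # hypothetical coolant temperature rise

for fluid in (WATER, AIR):
    flow = required_flow_m3_per_s(RACK_HEAT_W, DELTA_T_K, fluid)
    print(f"{fluid.name:>5}: {flow:.4f} m^3/s ({flow * 60_000:,.0f} L/min)")
```

The gap of more than three orders of magnitude in volumetric flow comes almost entirely from water's much higher density, which is why liquid loops can serve racks that air handlers cannot.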

The cooling infrastructure also uses Cooling Distribution Units (CDUs) to bridge the technology cooling system with the broader facility water system. While CDUs are sophisticated and efficient, making them redundant is both costly and complex. Maintaining water purity adds operational overhead as well: filters must be changed frequently, and each change introduces planned downtime that works against the high-availability goals of resilient data center designs.
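The cost of skipping CDU redundancy can be estimated with the standard parallel-availability formula, A = 1 − (1 − a)ⁿ. This sketch assumes independent failures and a hypothetical per-unit availability of 99.9%; that figure is illustrative, not a vendor specification. Under those assumptions, a single CDU averages about 8.8 hours of unplanned downtime per year, while a redundant pair brings that down to roughly half a minute.

```python
HOURS_PER_YEAR = 8760


def parallel_availability(unit_availability: float, units: int) -> float:
    """Availability of `units` identical components where any one suffices.

    Assumes independent failures: the group is down only if every unit is down.
    """
    return 1.0 - (1.0 - unit_availability) ** units


def annual_downtime_hours(availability: float) -> float:
    """Expected hours per year that the component (or group) is unavailable."""
    return (1.0 - availability) * HOURS_PER_YEAR


CDU_AVAILABILITY = 0.999  # hypothetical per-unit figure, not a vendor spec

for n in (1, 2):
    a = parallel_availability(CDU_AVAILABILITY, n)
    print(f"{n} CDU(s): availability {a:.6f}, "
          f"~{annual_downtime_hours(a) * 60:.1f} min/year unplanned downtime")
```

The model covers only unplanned failures. Planned work such as filter changes is a separate issue: a lone CDU must be taken offline, whereas a redundant pair can be serviced one unit at a time, which is the concurrent-maintainability idea behind Rating 3.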

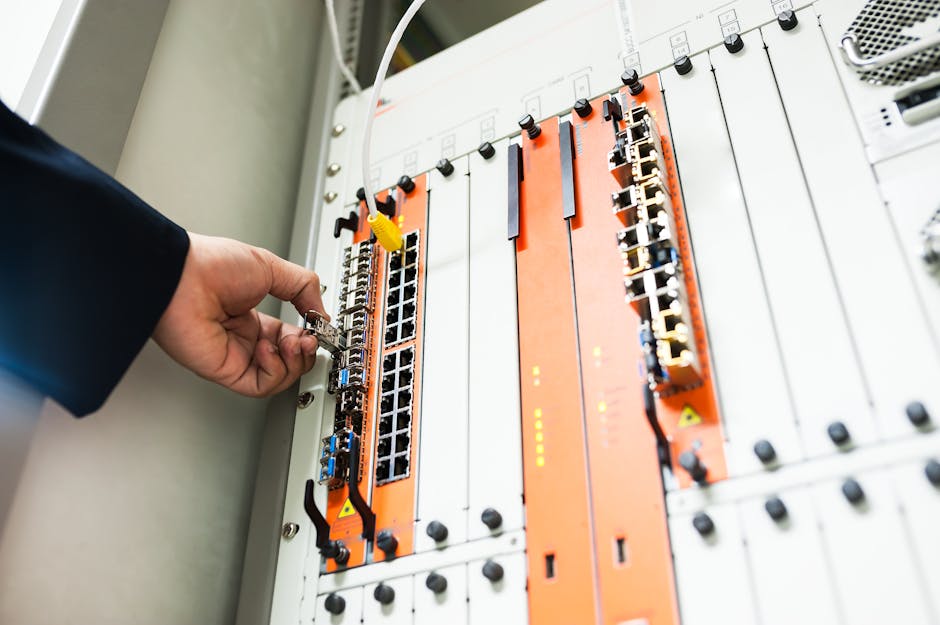

Cabling in the GPU Supercluster Era


Cabling, another critical element of data center design, is also being fundamentally transformed in AI HPC setups. Traditional standards advocate redundant structured cabling that offers flexible connections to many points in the network. In GPU superclusters, however, the reliance on short direct-attach cables for Ultra Ethernet and InfiniBand networks breaks with these conventions. A single 12-rack superpod can require as many as 131,000 cable links, leaving no room for redundant runs or patch panels. Instead, redundancy is provided at the logical network level rather than in the cabling infrastructure.
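One familiar form of logical-level redundancy is multipath load spreading: traffic is hashed across several equivalent links, and when a cable fails it is simply removed from the healthy set. The sketch below shows that idea with simple hash-modulo selection. It is a generic illustration; the link and flow names are hypothetical, and it does not claim to represent how any particular Ultra Ethernet or InfiniBand fabric routes traffic.

```python
import zlib


def pick_link(flow_id: str, links: list[str]) -> str:
    """Map a flow onto one of the currently healthy links by hashing its ID."""
    if not links:
        raise RuntimeError("no healthy links left")
    return links[zlib.crc32(flow_id.encode()) % len(links)]


links = ["spine-0", "spine-1", "spine-2", "spine-3"]  # hypothetical uplinks
flows = [f"gpu{i}->gpu{i + 64}" for i in range(8)]    # hypothetical flows

before = {f: pick_link(f, links) for f in flows}

# A single cable fails: drop it from the healthy set and re-hash every flow
healthy = [link for link in links if link != "spine-2"]
after = {f: pick_link(f, healthy) for f in flows}

for f in flows:
    print(f"{f:>12}: {before[f]} -> {after[f]}")
```

Plain modulo hashing reshuffles flows that were on healthy links too. Production fabrics typically use resilient or adaptive schemes that move only the affected traffic, but the principle is the same: the redundancy lives in path selection, not in spare cables.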

Nevertheless, the connection between AI superpods and the broader data center network still largely follows TIA-942, especially in the front-end network. Redundant telecommunications rooms and cable entry points ensure that these systems keep essential connectivity to external networks and other pods.

Balancing Innovation with Resilience


As AI HPC pushes the limits of power, cooling, and cabling infrastructure, it underscores the need for evolving standards to accommodate innovative architectures. Solutions may involve treating high-density GPU racks as single computing entities with bespoke power and cooling setups. At the same time, overall data center resilience can benefit from dual telecommunications and water supplies, ensuring reliability aligns with ANSI/TIA-942 guidelines.

The next phase of development demands collaboration between industry leaders, standards committees like TIA’s TR-42, and data center operators. Innovating within the framework of established standards will be critical to delivering the scalability, redundancy, and resilience needed to fully realize the potential of AI HPC environments.