AI at Scale: Why Infrastructure, Not Intelligence, Will Define the Winners

AI is entering a new chapter in which infrastructure, not smarter algorithms, will determine its future impact. According to a report from RCRTech, the focus has shifted to operational execution as the race to scale artificial intelligence intensifies. Energy systems, network capacity, data governance, and cost efficiency have emerged as critical factors in deploying AI technologies at scale, with implications for telcos, cloud providers, and policymakers alike.

The New AI Bottlenecks: Power, Networks, and Governance

The report argues that as AI systems graduate from pilot projects to full-scale production environments, foundational constraints that were once overlooked, such as energy use, network reliability, and latency demands, are coming to the forefront. Unlike traditional software applications, AI workloads are infrastructure-heavy, requiring precise orchestration of compute resources across on-premises, edge, and cloud environments. This operational shift, driven by heightened concerns over sustainability, cost inflation, and geopolitical regulation, marks a departure from the cloud-first assumptions of the past decade.

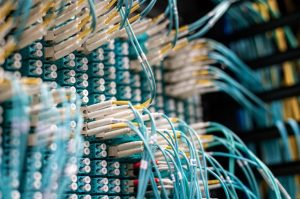

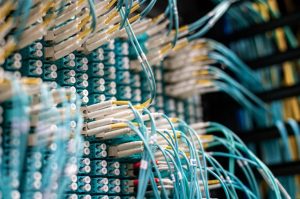

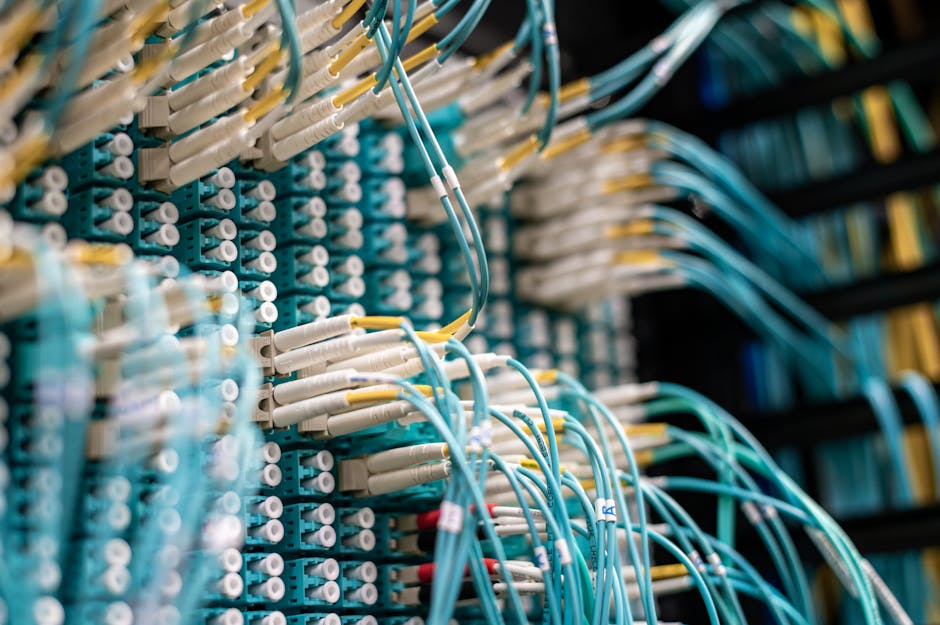

For telecom operators, this convergence of AI and industrial infrastructure presents both opportunities and challenges. High-capacity, low-latency networks are essential for enabling AI workloads at scale, particularly in hybrid and edge computing models where telcos are well-positioned to play a critical role. However, the industry faces intensifying competition from hyperscale cloud providers and an urgent need to adapt legacy network infrastructure to AI’s unique demands.

Market Context: Why AI Infrastructure Matters for Telcos

Industry observers note that AI’s move from innovation to industrial utility is reshaping expectations across the telecom ecosystem. With IDC expecting global AI spending to exceed $300 billion by 2026, the foundational role of telecom infrastructure is increasingly evident. As providers grapple with bandwidth-intensive AI traffic, particularly for real-time applications requiring ultra-low latency, the race to modernize fiber and 5G networks has taken on new urgency. Equally significant is the growing regulatory scrutiny of AI data handling, which gives telcos an incentive to establish themselves as trusted intermediaries for secure, compliant data transmission.

This shift also underscores the growing interplay between telecom networks and data centers. Latency-sensitive AI applications, such as autonomous systems and fraud detection, are driving demand for edge data centers closer to end-users. For telcos, delivering optimized AI services will likely depend on partnerships with edge computing players, as well as investment in AI-ready network architectures that support dynamic workload routing.

The Outlook: Operational AI and Telecom’s Next Frontier

The RCRTech report emphasizes that scaling AI is no longer a purely technical challenge but an operational one. As enterprises look to run AI systems more like industrial-scale utilities, telecom operators have a rare opportunity to align with broader infrastructure trends. However, the path will not be without obstacles. Experts caution that the volatility of energy markets, coupled with rising AI infrastructure costs, poses significant risks for operators already facing margin compression in their core connectivity businesses.

Still, the rapid evolution of AI infrastructure presents a call to action for the telecom sector to innovate beyond connectivity. As AI reshapes enterprise operations, telcos that invest in the right mix of network densification, AI workload orchestration, and edge partnerships will be well-placed to capture value from this next wave of digital transformation.
