Over 72% of Traffic to DNS Root Servers is Junk: Understanding the Digital Garbage Crisis

Imagine if seven out of every ten requests reaching one of the Internet’s most critical services served no useful purpose. That’s the reality facing the Domain Name System (DNS) root servers, which sit at the top of the naming hierarchy that nearly every online activity depends on. Recent research reports that over 72% of the traffic to DNS root servers consists of unwanted or illegitimate queries. These “junk” requests, often for domain names that do not exist, pose significant challenges to the stability, efficiency, and security of the Internet’s foundational systems. In this article, we’ll look at the causes behind this digital congestion, its consequences, and the steps being taken to address it.

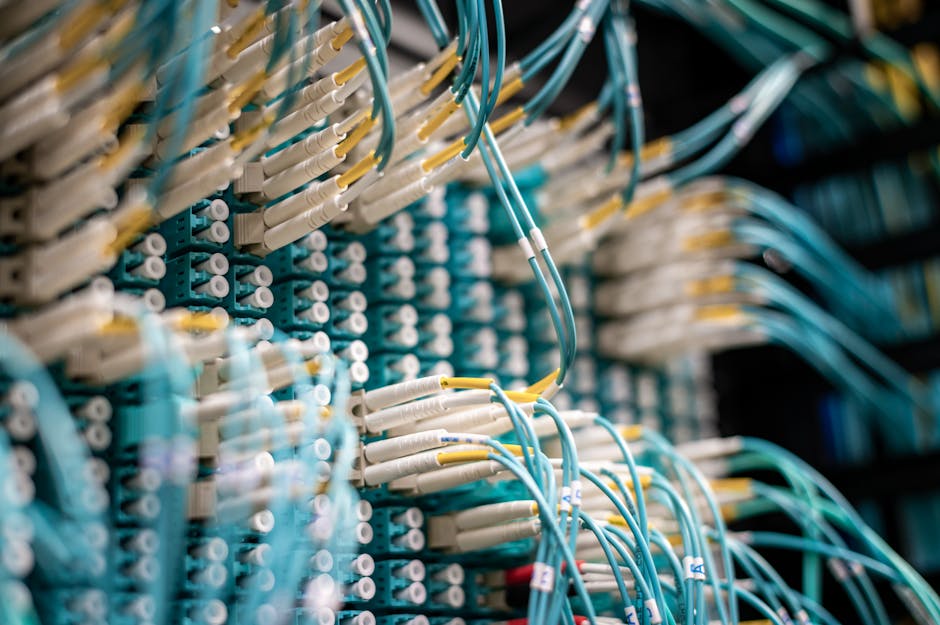

What Are DNS Root Servers and Why Are They Critical?

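The Domain Name System (DNS) acts as the Internet’s phonebook, translating human-readable domain names like google.com into the numerical IP addresses that computers use to communicate. At the apex of this system sit the DNS root servers: 13 named root server identities (a.root-servers.net through m.root-servers.net), run by 12 independent operators and replicated via anycast across more than a thousand instances worldwide, all serving the DNS root zone. Rather than answering most queries themselves, these servers act as gatekeepers: they reply with referrals that point resolvers to the name servers for the appropriate top-level domain (TLD), such as .com, .org, or country-code domains like .uk.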
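
To make the referral step concrete, here is a minimal Python sketch of how a root server chooses between a referral and an NXDOMAIN answer. It is a toy model, not real root server software: `ROOT_ZONE_DELEGATIONS` is a hypothetical, drastically truncated stand-in for the root zone, and the name-server hostnames are placeholders under the reserved .example domain.

```python
# Hypothetical, drastically truncated stand-in for the root zone: each TLD maps
# to the (placeholder) name servers the root would refer a resolver to.
ROOT_ZONE_DELEGATIONS: dict[str, list[str]] = {
    "com": ["ns1.com-tld.example", "ns2.com-tld.example"],
    "org": ["ns1.org-tld.example"],
    "uk": ["ns1.uk-tld.example"],
}


def root_response(qname: str) -> tuple[str, list[str]]:
    """Toy model of a root server's answer for qname.

    Returns ("REFERRAL", name_servers) when the TLD is delegated in the
    toy root zone, or ("NXDOMAIN", []) when that TLD does not exist.
    """
    # Only the last label matters here: "www.google.com." -> "com".
    tld = qname.rstrip(".").lower().rsplit(".", 1)[-1]
    if tld in ROOT_ZONE_DELEGATIONS:
        return "REFERRAL", ROOT_ZONE_DELEGATIONS[tld]
    return "NXDOMAIN", []


if __name__ == "__main__":
    for name in ["www.google.com.", "bbc.co.uk", "printer.local"]:
        print(name, root_response(name))
```

The real root zone holds well over a thousand TLD delegations, and real answers carry NS and glue records rather than a Python list, but in both cases the core decision depends only on the name’s last label.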

In principle, every lookup a user triggers by visiting a website or using an online service starts at the root. In practice, recursive resolvers cache the root’s referrals, so a well-configured resolver needs to contact the root only occasionally. Because any resolver on the Internet can query them, however, the root servers also receive high volumes of repetitive or unnecessary traffic. A recent analysis of one of them, b.root-servers.net (B-Root), found that over 72% of its incoming traffic is essentially garbage, consisting of queries for non-existent or misconfigured domain names.
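
A headline figure like 72% comes from classifying each query and counting. The sketch below assumes a simplified definition of junk, namely a query whose TLD is not delegated in the root zone, and uses a hypothetical, hard-coded `DELEGATED_TLDS` set and sample; the B-Root analysis may well use a broader or different definition.

```python
# Hypothetical subset of delegated TLDs; a real analysis would load the full
# root zone instead of hard-coding a handful of entries.
DELEGATED_TLDS = {"com", "net", "org", "uk", "de", "arpa"}


def tld_of(qname: str) -> str:
    """Return the last label of a query name, e.g. "bbc.co.uk." -> "uk"."""
    return qname.rstrip(".").lower().rsplit(".", 1)[-1]


def junk_share(qnames: list[str]) -> float:
    """Fraction of queries whose TLD does not exist in the (toy) root zone."""
    if not qnames:
        return 0.0
    junk = sum(1 for q in qnames if tld_of(q) not in DELEGATED_TLDS)
    return junk / len(qnames)


if __name__ == "__main__":
    # Hypothetical sample of query names arriving at a root server.
    sample = ["example.com.", "printer.local.", "wpad.home.", "xkqjzrtab.", "bbc.co.uk."]
    # Three of the five names have undelegated TLDs, so this prints 60%.
    print(f"{junk_share(sample):.0%} of sampled queries are junk")
```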

Why Does Junk Traffic Matter?

At first glance, junk DNS traffic may seem like harmless background noise, but it has real costs. Processing such requests consumes computational resources and bandwidth that would otherwise be available for legitimate queries. Left unchecked, this overload could contribute to slower lookups, degraded Internet performance, and even weaknesses that attackers could exploit.

Studies suggest that much of this junk traffic stems from faulty configurations or improper implementations of DNS clients. For instance, queries for non-existent domains, which the root answers with an NXDOMAIN (“no such domain”) response and which are often generated by automated systems or poorly maintained networks, account for a substantial portion of the problem. As this traffic grows, addressing its root causes becomes increasingly urgent for preserving the Internet’s performance and reliability.
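
Analysts often go a step further and group junk queries by likely cause. The following sketch uses assumed, illustrative buckets: names ending in suffixes commonly used on private networks (the `INTERNAL_SUFFIXES` set is a hypothetical example list), single-label names with no dot at all, and everything else with a non-existent TLD. These are heuristics for illustration, not the classification used in the B-Root study.

```python
from collections import Counter

# Hypothetical subset of delegated TLDs (a real analysis would load the root zone).
DELEGATED_TLDS = {"com", "net", "org", "uk", "de", "arpa"}
# Assumed examples of suffixes that are often used on private networks.
INTERNAL_SUFFIXES = {"local", "home", "corp", "lan"}


def classify(qname: str) -> str:
    """Assign a query name to one illustrative cause-of-junk bucket."""
    labels = qname.rstrip(".").lower().split(".")
    tld = labels[-1]
    if tld in DELEGATED_TLDS:
        return "valid"
    if tld in INTERNAL_SUFFIXES:
        return "leaked-internal-name"   # e.g. printer.local escaping a LAN
    if len(labels) == 1:
        return "single-label"           # e.g. "wpad." with no domain suffix
    return "other-nonexistent-tld"


def junk_breakdown(qnames: list[str]) -> Counter:
    """Count junk queries per bucket, leaving out valid ones."""
    counts = Counter(classify(q) for q in qnames)
    counts.pop("valid", None)
    return counts


if __name__ == "__main__":
    sample = ["example.com", "printer.local.", "router.home", "wpad.",
              "xkqjzrtab.", "mail.corp", "www.example.zz"]
    for category, n in junk_breakdown(sample).most_common():
        print(category, n)
```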

Turning Junk Traffic Into Opportunity

While unwanted queries pose challenges, they also present an opportunity for deep analysis and improvement. By examining patterns in junk traffic, researchers can detect misconfigured systems, identify emerging threats, and refine DNS protocols to be more efficient and secure. Breaking traffic down by Autonomous System (AS), the independently operated networks identified by AS numbers (ASNs), shows that certain network providers are responsible for disproportionately high levels of junk traffic. These insights open the door to targeted collaboration and remediation that could significantly strengthen the Internet’s resilience.
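
At its core, an AS-level breakdown is a group-by. In this sketch each record is a hypothetical `(asn, qname)` pair; in practice the ASN would come from mapping each query’s source IP address to its originating network using routing data. The ASNs in the example come from the range reserved for documentation (64496 to 64511), not real networks, and the junk test is the same simplifying TLD check assumed earlier.

```python
from collections import Counter

# Hypothetical subset of delegated TLDs (a real analysis would load the root zone).
DELEGATED_TLDS = {"com", "net", "org", "uk", "de", "arpa"}


def is_junk(qname: str) -> bool:
    """Treat a query as junk if its TLD is not delegated (a simplifying assumption)."""
    return qname.rstrip(".").lower().rsplit(".", 1)[-1] not in DELEGATED_TLDS


def top_junk_asns(records: list[tuple[int, str]], n: int = 3) -> list[tuple[int, float]]:
    """Return up to n (asn, share_of_all_junk) pairs, largest contributors first."""
    junk_by_asn = Counter(asn for asn, qname in records if is_junk(qname))
    total_junk = sum(junk_by_asn.values())
    if total_junk == 0:
        return []
    return [(asn, count / total_junk) for asn, count in junk_by_asn.most_common(n)]


if __name__ == "__main__":
    # Hypothetical (source ASN, query name) records using documentation ASNs.
    records = [
        (64496, "wpad."), (64496, "printer.local"), (64496, "xkqjzrtab."),
        (64497, "example.com"), (64497, "router.home"),
        (64498, "example.org"),
    ]
    for asn, share in top_junk_asns(records):
        print(f"AS{asn}: {share:.0%} of junk")
```

Reporting each network’s share of all junk, rather than its raw count, makes it easier to see when a handful of networks dominate the problem.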

Efforts to clean up DNS traffic extend across multiple layers of the Internet’s ecosystem. Proposed solutions include validating queries locally, filtering invalid requests before they ever reach the root servers, and improving operational practices at the root. Researchers like Dipsy Desai, a Ph.D. student and 2025 Pulse Research Fellow, are developing approaches to stop unwanted traffic at its source, signaling a promising shift toward sustainable DNS management.
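
One way to filter invalid requests before they reach the root is for a resolver to keep its own list of delegated TLDs and answer queries for anything else itself. The class below is a hypothetical sketch of that idea, loosely in the spirit of running a local copy of the root zone as described in RFC 8806; `LocalRootFilter` and its `forward` callback are illustrative names, and the upstream is stubbed out.

```python
from typing import Callable

# Hypothetical subset of delegated TLDs; a real resolver would derive this
# from an up-to-date copy of the root zone.
DELEGATED_TLDS = {"com", "net", "org", "uk", "de", "arpa"}


class LocalRootFilter:
    """Answer queries for non-existent TLDs locally; forward everything else."""

    def __init__(self, forward: Callable[[str], str], delegated_tlds: set[str]):
        self.forward = forward              # stand-in for a real upstream query
        self.delegated_tlds = delegated_tlds
        self.answered_locally = 0
        self.forwarded = 0

    def resolve(self, qname: str) -> str:
        tld = qname.rstrip(".").lower().rsplit(".", 1)[-1]
        if tld not in self.delegated_tlds:
            # The TLD does not exist, so the root would only say NXDOMAIN anyway.
            self.answered_locally += 1
            return "NXDOMAIN"
        self.forwarded += 1
        return self.forward(qname)


if __name__ == "__main__":
    # Stub upstream standing in for a real query to the root servers.
    resolver = LocalRootFilter(forward=lambda q: "REFERRAL", delegated_tlds=DELEGATED_TLDS)
    for name in ["example.com", "wpad.", "printer.local", "bbc.co.uk"]:
        print(name, resolver.resolve(name))
    print("answered locally:", resolver.answered_locally, "forwarded:", resolver.forwarded)
```

The main design risk is staleness: if the local TLD list falls behind the real root zone, the filter would wrongly reject newly delegated TLDs, so a deployment would need to refresh it regularly.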

Collaborative Solutions for a Resilient Internet

Resolving the junk traffic problem requires a multi-pronged effort involving software developers, network operators, and standards organizations. Validating queries locally and making better use of caching, including caching negative (NXDOMAIN) answers, can reduce the burden on the root servers. In addition, improving DNS protocols and working with the organizations that generate high levels of NXDOMAIN traffic could spread the load more evenly and shrink the outsized share of junk that comes from a few networks.
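
Negative caching is the simplest of these levers: once a resolver learns that a name does not exist, it remembers that answer for a while instead of asking the root again. This sketch assumes a fixed, hypothetical TTL; real resolvers derive the negative-cache TTL from the zone’s SOA record, as specified in RFC 2308. The `NegativeCache` class and its injectable `clock` are illustrative, the latter so that expiry can be tested without waiting.

```python
import time
from typing import Callable


class NegativeCache:
    """Remember NXDOMAIN answers for a TTL so repeats never leave the resolver."""

    def __init__(self, upstream: Callable[[str], str], ttl_seconds: float = 86400.0,
                 clock: Callable[[], float] = time.monotonic):
        self.upstream = upstream          # stand-in for a real query toward the root
        self.ttl = ttl_seconds            # hypothetical fixed negative TTL
        self.clock = clock
        self._expiry: dict[str, float] = {}
        self.upstream_queries = 0

    def resolve(self, qname: str) -> str:
        key = qname.rstrip(".").lower()
        now = self.clock()
        expires = self._expiry.get(key)
        if expires is not None and now < expires:
            return "NXDOMAIN"             # served from cache; upstream not contacted
        self.upstream_queries += 1
        answer = self.upstream(qname)
        if answer == "NXDOMAIN":
            self._expiry[key] = now + self.ttl
        else:
            self._expiry.pop(key, None)   # name exists now; drop any stale entry
        return answer


if __name__ == "__main__":
    fake_now = [0.0]
    cache = NegativeCache(
        upstream=lambda q: "NXDOMAIN" if q.endswith(".local") else "REFERRAL",
        ttl_seconds=60,
        clock=lambda: fake_now[0],
    )
    for _ in range(1000):
        cache.resolve("printer.local")
    print("upstream queries for 1000 lookups:", cache.upstream_queries)
```

Here a thousand repeated lookups for the same misconfigured name cost a single upstream query until the entry expires.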

Ultimately, the ongoing fight against unwanted DNS traffic highlights how complex and interconnected the Internet truly is. As researchers, operators, and developers work together to bolster the resilience of the root servers, users stand to benefit from a faster, more reliable Internet. For the Internet to thrive in the coming years, reducing the strain from digital “junk” isn’t optional; it’s essential.